By the end of the next decade, in a future dominated by networks, connections and collaboration, analytical instruments will look similar to those of today. But they will offer an experience far beyond the one to which we have become accustomed. In the future, instruments will be fully enabled for access anywhere, at any time, by anyone with the proper authorisation. As instruments collect data, they will buffer it locally and then store it either in an enterprise-level informatics system belonging to a large organisation, such as a pharmaceutical company, or in the internet cloud if the data comes from a smaller organisation, such as a university laboratory.

Chromatography data systems (CDS), electronic lab notebooks (ELN) and enterprise content management (ECM) systems will be scalable and tightly coupled to each other. Informatics architecture will be completely open, and vendors will cooperate to build out an ecosystem of partners providing a range of useful applications that no single manufacturer could provide alone. New collaboration services will enable researchers across global workgroups to access new data immediately as their software rapidly transforms, integrates and visualises the data into information and insight, thus facilitating long-sought progress in systems biology and predictive analyses. For both large and small organisations, this future promises to be remarkably exciting, but how, as an industry, can we get there?

Lab computing evolution

Yesterday’s laboratory information management architecture was based on a one-to-one relationship between laboratory instruments and the data systems that controlled them. These data systems could be networked, but were not designed to take full advantage of a networked environment. As a result, each data system was its own island of data. Because administration of these systems was largely performed workstation by workstation by lab personnel, it was expensive and time-consuming.

The advent of good laboratory practice (GLP) and regulatory compliance requirements, together with security concerns, was a major driver of change. Who in the lab was approved to develop and change methods? Was the correct version of instrument software used? Corporations realised they could no longer treat the laboratory as an island and moved to align it with their centralised IT management processes and standards.

Today, instrument manufacturers such as Agilent Technologies have responded with network-ready, standardised systems that can be scaled from the desktop to the workgroup and on to the entire enterprise. These systems enable centralised administration, leveraged infrastructure, improved security, easier regulatory compliance, lower costs and, to an extent, data sharing across the enterprise. For example, with the introduction of shared services, we can now deliver benefits such as single log-on, the ability to group projects and permissions based on user identity. Access is controlled and the systems are secure, while processes can now be distributed across multiple workstations.

When advanced reporting tools are coupled to the shared services environment, users can perform and automate cross-sample, cross-batch reporting. Chromatography systems can now be integrated with ECM systems so there is an underlying archival and retrieval system that makes it possible for organisations to organise, share and thus raise the value of their data.

Information integration

Today’s systems offer significant improvements over those of 10 years ago, but substantial gaps remain. A major bottleneck in many organisations is the management, interpretation and archiving of the growing volume of data generated by a variety of analytical instruments. Increasingly large and complex data sets, now measured in petabytes rather than megabytes, gigabytes or terabytes, and held in multiple formats, must be interpreted and archived, and this trend shows no signs of slowing. The number of isolated systems and data pools is growing too, and there are no standards that allow data to be integrated and shared across different analytical instruments and techniques.

In the life sciences, data from multiple ‘omics’ disciplines must be integrated to advance key industry initiatives. Organisations across the globe now pursue predictive analyses driven by systems biology as a way to solve key challenges in agriculture and healthcare. As systems biology approaches grow, complex data from multiple interdisciplinary sources around the globe must be integrated, analysed, correlated and visualised, increasing the demand for fast, unrestricted, yet secure access. Though global collaborations are commonplace, few of today’s informatics tools provide a truly collaborative environment.

Easy collaboration, delivered as ‘collaboration services’, is what future-facing organisations are investing in. To enable it, manufacturers must develop and implement standards and open systems. Many collaborative research teams are globally distributed and use many different systems, with different functions, languages and interfaces. The informatics environment of the future must provide a common foundation on which distributed users can combine their expertise and perspectives, whatever language they work in.

New possibilities

New technologies are driving progress, particularly for organisations unable to invest in a large informatics infrastructure. With the advent of web services and cloud computing, informatics providers can supply economical solutions to smaller organisations. Cloud computing, a relatively new delivery model for IT and business applications, is made possible by remote computing over the internet. Users access applications through their web browser as if they were installed on their own computer and do not need to have expertise in or control over the infrastructure that supports them. Application software and data are stored on servers managed by the provider.

Organisations can access software in the internet cloud in the form of web-based services. The pharmaceutical industry has been at the forefront of this trend. Web services extend users’ working environment into their community of collaborators, or allow them to broker their services across a larger community of interest, without the overhead of a central IT organisation and a large data centre.

Web services-based architecture has other advantages. The internet browser becomes the user interface. Because access is over the internet, users gain anytime, anywhere access and remote services, and can distribute workflows across their workgroup. Laboratories often start sample runs and return the next day to find that not all samples ran successfully. Imagine the productivity gain if the analyst received a smart alert as soon as a run encountered a problem, so that they could decide whether to go back into the lab and intervene.
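The smart alert imagined above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not how any particular data system implements it: the `SampleResult` record, its `status` values and the `check_run` function are hypothetical, and `notify` stands in for whatever delivery channel (email, text message, browser notification) a real web service would use. Because `results` can be any iterable, including a generator that yields each sample as it completes, alerts could go out during the run rather than the next morning.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List


@dataclass
class SampleResult:
    """One sample's outcome from a hypothetical run log."""
    sample_id: str
    status: str        # assumed values: "ok" for success, anything else is a problem
    message: str = ""


def check_run(results: Iterable[SampleResult],
              notify: Callable[[str], None]) -> List[str]:
    """Send one alert per problem sample and return the alert texts.

    `notify` is a placeholder for a real delivery channel; here it is
    simply any callable that accepts a string.
    """
    alerts = []
    for result in results:
        if result.status != "ok":
            text = f"Sample {result.sample_id} failed: {result.message}"
            notify(text)          # alert immediately, not at the end of the run
            alerts.append(text)
    return alerts


if __name__ == "__main__":
    # Illustrative data only.
    run = [
        SampleResult("S-001", "ok"),
        SampleResult("S-002", "failed", "pressure limit exceeded"),
        SampleResult("S-003", "ok"),
    ]
    check_run(run, notify=print)
```

Passing the delivery channel in as a function keeps the detection logic independent of how the analyst is actually reached.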

But cloud computing is not without controversy. Cloud computing applications vary in the level of security supplied. Organisations are asking: are the servers physically secured? Could my data be stolen? Those trying this approach will need to put serious thought into the level of security needed.

Other technologies that promise to facilitate the development of collaborative informatics are natural language processing and semantic web tools. Natural language processing is a field of computer science concerned with translating between computer data and human language. The goal of semantic web tools is to make web-based information understandable by computers, so that computers can perform the tedious work of finding and integrating information across applications and systems.

Figure 1 illustrates the evolution of laboratory computing and informatics in terms of the greater level of capabilities that modern IT systems are now starting to offer.

Standards and open systems

Informatics suppliers can help to advance laboratory computing in a number of ways. The development and implementation of industry standards is the critical first step. There is a fundamental need for a standard data format that enables straightforward data integration and collaboration across communities of users. The current trend is toward XML-based formats, but the industry has not standardised on a specific one, and it is not clear that XML will be adequate across all of the tools and disciplines that must be integrated in a systems biology approach. Although manufacturers have found it difficult to define a data structure that works for all of the analytical instruments that laboratories use, forward-thinking informatics suppliers have begun to work toward solutions.

In addition to standards, manufacturers need to provide open instrument control. To do their work, laboratories must use a variety of analytical instruments from a variety of vendors, and they also need standard operating procedures (SOPs) that work across all instrument types. Manufacturers such as Agilent now provide an open instrument control framework (ICF) that enables laboratories to control their instruments from one standard software environment and, ultimately, to develop lab-wide rather than instrument-specific SOPs. The open ICF also makes it easier for third-party software developers to build supporting products.

Laboratories shouldn’t need to use a unique reporting engine for each vendor’s instruments. Today, when the same experiment is run on two different vendors’ systems, laboratory managers have to review two different sets of reports, and they waste time working out which differences come from the reporting and which are real differences between the samples. Manufacturers need to provide a single reporting engine that works across instrument types and vendors.
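A single reporting engine of this kind can be thought of as an adapter pattern: each vendor’s native output is mapped once into a common record, and one report function formats every record the same way. The sketch below is illustrative only. It assumes two invented vendor layouts (`vendor_a` reporting retention time in minutes, `vendor_b` in seconds) and a hypothetical `Peak` record; none of these field names come from a real instrument, product or data standard.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass(frozen=True)
class Peak:
    """Hypothetical vendor-neutral peak record."""
    sample: str
    retention_min: float
    area: float


# Hypothetical adapters: each maps one vendor's native record to a Peak.
def from_vendor_a(rec: Dict) -> Peak:
    # Assumed layout: retention time already in minutes.
    return Peak(rec["SampleName"], float(rec["RT_min"]), float(rec["Area"]))


def from_vendor_b(rec: Dict) -> Peak:
    # Assumed layout: retention time in seconds, converted to minutes here.
    return Peak(rec["sample"], float(rec["retention_s"]) / 60.0,
                float(rec["peak_area"]))


ADAPTERS: Dict[str, Callable[[Dict], Peak]] = {
    "vendor_a": from_vendor_a,
    "vendor_b": from_vendor_b,
}


def normalise(vendor: str, records: List[Dict]) -> List[Peak]:
    """Convert native records from one vendor into common Peak records."""
    adapter = ADAPTERS[vendor]
    return [adapter(r) for r in records]


def report(peaks: List[Peak]) -> str:
    """One reporting engine: the same layout whatever the source vendor."""
    lines = [f"{'Sample':<10}{'RT (min)':>10}{'Area':>12}"]
    for p in sorted(peaks, key=lambda p: (p.sample, p.retention_min)):
        lines.append(f"{p.sample:<10}{p.retention_min:>10.2f}{p.area:>12.1f}")
    return "\n".join(lines)


if __name__ == "__main__":
    # Illustrative values only: the same sample run on two vendors' systems.
    a = normalise("vendor_a", [{"SampleName": "S1", "RT_min": 2.5, "Area": 1520.0}])
    b = normalise("vendor_b", [{"sample": "S1", "retention_s": 150, "peak_area": 1498.0}])
    print(report(a + b))
```

With this design, supporting a new vendor means writing one more adapter; the report layout, and therefore what a laboratory manager reviews, stays the same, so any remaining differences reflect the samples rather than the reporting.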

Collaboration services

While standards and open systems provide the foundation for collaboration, organisations are fundamentally interested in purchasing services that facilitate collaboration, leverage experts across the organisation and help extract value from huge amounts of data, all while protecting their intellectual property. Agilent’s OpenLAB, for example, is an operating system for the laboratory that integrates analytical instrument control and data analysis, enterprise content management and laboratory business process management into a single, scalable, web-based system. Agilent’s Electronic Lab Notebook is an open, scalable, integrated platform for creating, managing, sharing and protecting data in a complex global environment. It is available in Chinese and Korean, enabling researchers to collaborate easily in their own languages and in line with local requirements.

Though the accelerating adoption of ELNs across the R&D landscape is helping organisations improve collaboration, data accessibility and knowledge retention, manufacturers still need to go further in developing tools that present data in ways that can be understood and interpreted across disciplines and languages. Advances in data reduction, visualisation, modelling and simulation are urgently needed to help find the few pieces of data that truly make a difference among the petabytes available.

Faster decision-making

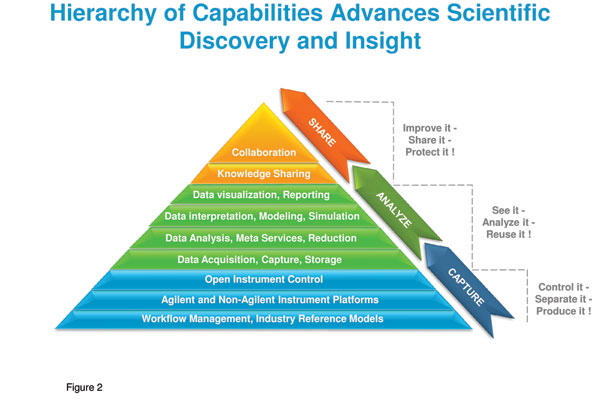

Organisations require a hierarchy of capabilities with laboratory instrument control and data collection at its base. Today, manufacturers have refocused product enhancements on data analysis, interpretation, visualisation and reporting. Though instrument control, data acquisition and data interpretation are fundamental to analytical work, organisations want to make decisions and solve scientific problems faster. Doing so requires a higher level of capability that integrates public and private data from disparate sources to facilitate knowledge sharing across disciplines and geographies, while protecting intellectual property.

To deliver this new level of value, informatics suppliers must start by focusing on the development of industry standards and open systems. In turn, these standards and open systems will provide the foundation for breakthroughs in the collaboration technologies and services of the future.

Bruce von Herrmann is vice president and general manager, software and informatics at Agilent Technologies, based in Santa Clara, California, US.